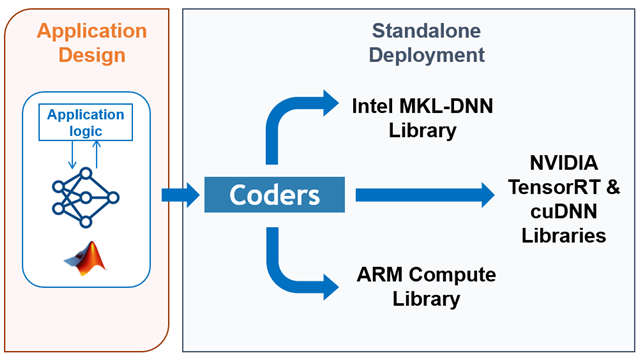

Designing deep learning and computer vision applications and deploying them to embedded GPUs and CPUs, such as NVIDIA Jetson and DRIVE platforms, is challenging because of the resource constraints inherent in embedded devices. A Simulink-based workflow facilitates the design of these applications, and the automatically generated C/C++ or CUDA® code can be deployed to achieve up to 2X faster inference than other deep learning frameworks.

This webinar walks you through the workflow. Create an automotive lane and vehicle detection application using a YOLO v2 network in Simulink running on the CPU, test it on the same desktop using a Titan V GPU, then deploy it onto a Jetson AGX Xavier. Design and deploy deep learning networks for pedestrian detection, blood smear segmentation, and defective product detection to an Intel Xeon processor on a desktop, an ARM Cortex-A processor on a Raspberry Pi, or an NVIDIA Jetson AGX Xavier. Learn how to access peripherals on the Jetson platform from Simulink and from the generated code. Finally, hear about the optimizations applied to the generated code that help it achieve up to 2X faster inference than other deep learning frameworks.

Please allow approximately 45 minutes to attend the presentation and Q&A session. We will be recording this webinar, so if you can't make it for the live broadcast, register, and we will send you a link to watch it on demand.

Bill Chou is the Product Manager for MATLAB Coder and has been working with MathWorks code generation technologies for 15 years. Bill holds an M.S. degree in Electrical Engineering from the University of Southern California and a B.A.Sc. degree in Electrical Engineering from the University of British Columbia.